Vonage Media Processor

Intro

Vonage Media Processor is a helper library for web developers who want to implement insertable streams (a.k.a. Breakout Box) on Chromium-based browsers using any Vonage JS SDK (voice and video).

Insertable Streams

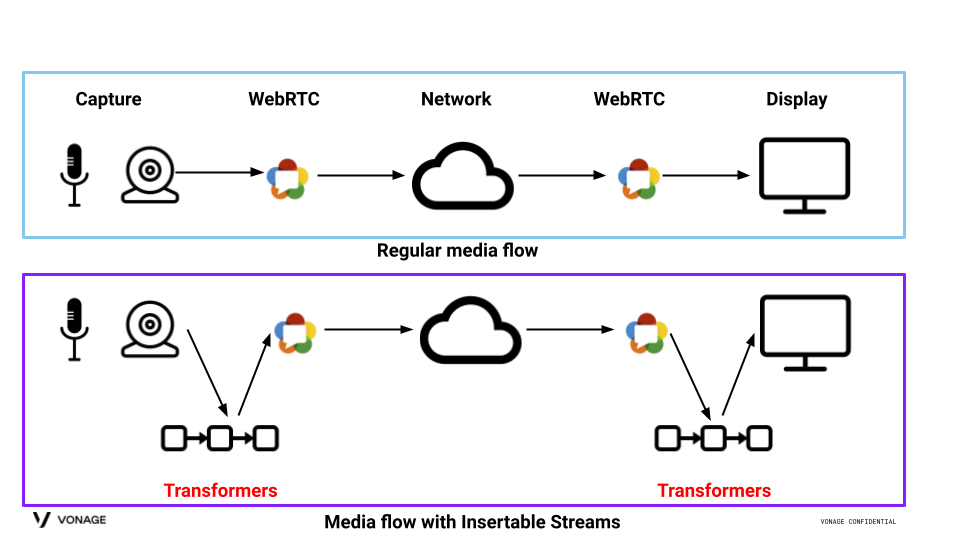

Insertable streams (a.k.a. Breakout Box) give developers access to the raw media data (audio data and video frames) before WebRTC encodes it and after WebRTC decodes it, using the TransformStream transformer API. With Media Processor you can chain as many transformers as you like.

As the image above shows, in the regular flow media from the camera/microphone goes directly into WebRTC.

With insertable streams, media passes through the transformers (your code) before it enters WebRTC or before it reaches the renderer process. Inside a transformer you can do whatever you need with the data: modify it, extract information from it, and so on. When a transformer finishes, the data is piped to the next transformer if one exists; otherwise it continues into WebRTC or the renderer process.
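The chaining described above can be sketched with the standard Streams API alone. The example below is an illustration, not the library's implementation: `Stage`, `chainStages`, `collect`, and `makeSource` are hypothetical names, and the chunks are strings rather than media frames so that the flow is easy to follow.

```typescript
// A stage mirrors the `transform` hook of a TransformStream transformer.
interface Stage<T> {
  transform(chunk: T, controller: { enqueue(chunk: T): void }): void;
}

// Pipe `source` through every stage, in array order, and return the final stream.
function chainStages<T>(source: ReadableStream<T>, stages: Stage<T>[]): ReadableStream<T> {
  let stream = source;
  for (const stage of stages) {
    stream = stream.pipeThrough(
      new TransformStream<T, T>({
        transform: (chunk, controller) => stage.transform(chunk, controller),
      })
    );
  }
  return stream;
}

// Read a stream to the end and collect its chunks (only used to inspect the output).
async function collect<T>(stream: ReadableStream<T>): Promise<T[]> {
  const reader = stream.getReader();
  const chunks: T[] = [];
  for (;;) {
    const result = await reader.read();
    if (result.done) return chunks;
    chunks.push(result.value);
  }
}

// Two toy stages that work on strings instead of media frames.
const upperCase: Stage<string> = {
  transform: (chunk, controller) => controller.enqueue(chunk.toUpperCase()),
};
const exclaim: Stage<string> = {
  transform: (chunk, controller) => controller.enqueue(chunk + "!"),
};

// A source that emits two chunks and then closes.
function makeSource(): ReadableStream<string> {
  return new ReadableStream<string>({
    start(controller) {
      controller.enqueue("hello");
      controller.enqueue("world");
      controller.close();
    },
  });
}
```

Calling `collect(chainStages(makeSource(), [upperCase, exclaim]))` applies the stages in array order, so each chunk is upper-cased before the exclamation mark is appended. With an empty array the source is returned untouched, which corresponds to the regular flow.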

The advantages of using insertable streams are:

- Allows the processing to be specified by the user, not the browser.

- Allows the processed data to be handled by the browser as if it came through the normal pipeline.

- Allows the use of techniques like WASM to achieve effective processing.

- Allows the use of techniques like Web workers to avoid blocking on the application main thread.

- Does not negatively affect the security or privacy of current communications.

More information can be found here.

Sample applications

Sample applications can be found here.

Library usage

This sample uses the OT.Publisher API to integrate the library.

Create Transformer

class SimpleTransformer implements Transformer {
  startCanvas_: OffscreenCanvas;
  startCtx_: OffscreenCanvasRenderingContext2D;
  message_: string;

  constructor(message: string) {
    this.startCanvas_ = new OffscreenCanvas(1, 1);
    // getContext() returns null when a 2D context cannot be created.
    const ctx = this.startCanvas_.getContext("2d");
    if (!ctx) {
      throw new Error("Unable to create OffscreenCanvasRenderingContext2D");
    }
    this.startCtx_ = ctx;
    this.message_ = message;
  }

  //start function is optional.
  start(controller: TransformStreamDefaultController) {
    //In this sample nothing needs to be done.
  }

  //transform function must be implemented.
  transform(frame: any, controller: TransformStreamDefaultController) {
    // Resize the canvas to the incoming frame before drawing on it.
    this.startCanvas_.width = frame.displayWidth;
    this.startCanvas_.height = frame.displayHeight;
    let timestamp: number = frame.timestamp;
    this.startCtx_.drawImage(frame, 0, 0, frame.displayWidth, frame.displayHeight);
    this.startCtx_.font = "30px Arial";
    this.startCtx_.fillStyle = "black";
    this.startCtx_.fillText(this.message_, 50, 150);
    // Release the original frame once it has been copied to the canvas.
    frame.close();
    controller.enqueue(
      new VideoFrame(this.startCanvas_, { timestamp, alpha: "discard" })
    );
  }

  //flush function is optional.
  flush(controller: TransformStreamDefaultController) {
    //In this sample nothing needs to be done.
  }
}

export default SimpleTransformer;
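The three hooks can also be exercised without a canvas. The following sketch is a hypothetical transformer, not part of the library, that forwards one frame out of every `keepEvery` and releases the rest. `FrameSkipTransformer` and `ClosableFrame` are names invented for this illustration.

```typescript
// Minimal shape of a frame for this sketch; a real VideoFrame also exposes close().
interface ClosableFrame {
  close(): void;
}

// Hypothetical transformer that forwards one frame out of every `keepEvery` frames.
class FrameSkipTransformer<F extends ClosableFrame> {
  private keepEvery_: number;
  private seen_ = 0;

  constructor(keepEvery: number) {
    if (!Number.isInteger(keepEvery) || keepEvery < 1) {
      throw new RangeError("keepEvery must be a positive integer");
    }
    this.keepEvery_ = keepEvery;
  }

  // Optional hook: reset the counter when the stream starts.
  start(): void {
    this.seen_ = 0;
  }

  // Required hook: either forward the frame or release it.
  transform(frame: F, controller: { enqueue(frame: F): void }): void {
    const keep = this.seen_ % this.keepEvery_ === 0;
    this.seen_ += 1;
    if (keep) {
      controller.enqueue(frame);
    } else {
      // A dropped frame should be closed so that its resources are released.
      frame.close();
    }
  }

  // Optional hook: nothing is buffered, so there is nothing to emit at the end.
  flush(): void {}
}
```

With `new FrameSkipTransformer(3)` the first, fourth, seventh (and so on) frames are enqueued and every other frame is closed. Not enqueuing a chunk is how a transformer drops it from the stream.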

Using the transformer on the application main thread

Main code:

...
import { MediaProcessor, MediaProcessorConnector } from '@vonage/media-processor';
...
const transformer1: SimpleTransformer = new SimpleTransformer("hello")
const transformer2: SimpleTransformer = new SimpleTransformer("world")
const mediaProcessor: MediaProcessor = new MediaProcessor()
const transformers = [ transformer1, transformer2]
mediaProcessor.setTransformers(transformers)
const connector: MediaProcessorConnector = new MediaProcessorConnector(mediaProcessor)
...
publisher.setVideoMediaProcessorConnector(connector)
...

- Create two transformer instances. One will print hello and the other one will print world.

- Create a new MediaProcessor instance (e.g. new MediaProcessor()).

- Set the array of transformers previously created on the MediaProcessor instance (e.g. mediaProcessor.setTransformers(transformers)).

- Create a MediaProcessorConnector instance from the MediaProcessor instance previously created (e.g. new MediaProcessorConnector(mediaProcessor)).

- Finally, set the MediaProcessorConnector instance on one of the Vonage SDKs, in this example OT.Publisher (e.g. publisher.setVideoMediaProcessorConnector(connector)).

Using the transformer on a Web worker thread

Using the library together with Web workers gives the best performance, because the processing no longer blocks the application main thread. The worker helper class below acts as the bridge to the worker and runs on the application main thread.

MediaProcessor bridge code:

class MediaProcessorHelperWorker implements MediaProcessorInterface {
  worker_: Worker;
  workerUrl_: string = "https://some-worker.com/worker.js";

  constructor() {
    this.worker_ = new Worker(this.workerUrl_);
    this.worker_.addEventListener('message', (msg: MessageEvent) => {
      // The worker replies with a JSON string, so parse it before reading its fields.
      const data = JSON.parse(msg.data);
      if (data.message === 'transform') {
        ...
      } else if (data.message === 'destroy') {
        ...
        //release the worker!!!
        this.worker_.terminate();
      }
    });
  }

  //override function
  transform(readable, writable) {
    // Streams are transferable: listing them moves them to the worker instead of copying.
    this.worker_.postMessage(
      { operation: 'transform', readable, writable },
      [readable, writable]
    );
  }

  //override function
  destroy() {
    this.worker_.postMessage({ operation: 'destroy' });
  }
}

Web worker code:

...
import { MediaProcessor, MediaProcessorConnector } from '@vonage/media-processor';
...
const transformer1: SimpleTransformer = new SimpleTransformer("hello")
const transformer2: SimpleTransformer = new SimpleTransformer("world")
const mediaProcessor: MediaProcessor = new MediaProcessor()
const transformers = [ transformer1, transformer2]
mediaProcessor.setTransformers(transformers)

onmessage = async (event) => {
  // The streams arrive in the same message as the operation name.
  const { operation, readable, writable } = event.data;
  switch (operation) {
    case 'transform':
      mediaProcessor.transform(readable, writable).then(() => {
        const msg = {callbackType: 'success', message: 'transform'};
        postMessage(JSON.stringify(msg));
      })
      break;
    case 'destroy': {
      const msg = {callbackType: 'success', message: 'destroy'};
      postMessage(JSON.stringify(msg));
      break;
    }
  }
}

Main code:

...
// The helper creates its own worker, so the constructor takes no arguments.
const mediaProcessor = new MediaProcessorHelperWorker();
const connector = new MediaProcessorConnector(mediaProcessor);
...
publisher.setVideoMediaProcessorConnector(connector)

The main difference between running this code on the application main thread and on a Web worker is that you must provide the bridge between the two. This bridge class should implement the MediaProcessorInterface interface.

The library does not provide this communication layer because it is application specific: the right design depends on the use case being implemented.

The MediaProcessor and Transformer instances must be created in the Web worker, not on the application main thread.
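Because the bridge is application specific, it helps to pin down the message format shared by both threads. The sketch below assumes the reply shape used in the worker code above (a JSON string carrying `callbackType` and `message`). `WorkerReply` and `parseWorkerReply` are hypothetical names, not library exports.

```typescript
// Reply shape posted by the worker in the sample above.
type WorkerReply = {
  callbackType: "success";
  message: "transform" | "destroy";
};

// Validate a raw worker message and return a typed reply, or null if it does not match.
function parseWorkerReply(raw: unknown): WorkerReply | null {
  // The sample worker posts JSON strings, so anything else is rejected.
  if (typeof raw !== "string") return null;

  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null; // not valid JSON
  }
  if (typeof data !== "object" || data === null) return null;

  const fields = data as Record<string, unknown>;
  if (fields.callbackType !== "success") return null;

  const message = fields.message;
  if (message === "transform" || message === "destroy") {
    return { callbackType: "success", message };
  }
  return null;
}
```

In the bridge class, the `message` listener could call `parseWorkerReply(msg.data)` and ignore `null` results, so that unrelated or malformed messages never reach the `transform` and `destroy` branches.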

Errors, Warnings and Statistics

isSupported

This check verifies that the browser supports the insertable streams API (supported browsers).

try {
  await isSupported();
} catch (e) {
  console.error(e);
}
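For context, a check of this kind usually comes down to feature detection. The sketch below is an assumption about what such a check could look like, not the library's `isSupported` implementation: it tests for `MediaStreamTrackProcessor` and `MediaStreamTrackGenerator`, the two globals that Chromium exposes for insertable streams on media tracks. `hasBreakoutBoxApis` is a hypothetical name.

```typescript
// Return true when both insertable-streams globals are present on `scope`.
// `scope` defaults to the global object; passing another object makes the check testable.
function hasBreakoutBoxApis(scope: object = globalThis): boolean {
  return (
    "MediaStreamTrackProcessor" in scope &&
    "MediaStreamTrackGenerator" in scope
  );
}
```

An application could use a check like this to decide whether to offer media effects at all, and publish unprocessed media otherwise.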

Errors and Warnings Listener

MediaProcessor instances emit notifications through Emittery.

mediaProcessor.on("error", (eventData: ErrorData) => {
  console.error(eventData);
});

mediaProcessor.on("warn", (eventData: WarnData) => {
  console.warn(eventData);
});

mediaProcessor.on("pipelineInfo", (eventData: PipelineInfoData) => {
  console.info(eventData);
});

Statistics

The API can collect statistics for usage and debugging purposes, but only after the developer activates them.

Turn statistics on:

const metadata: VonageMetadata = {
  appId: "vonage app id",
  sourceType: "video", // or "voice"
  proxyUrl: "https://some-proxy.com", //optional
};
setVonageMetadata(metadata);

Turn statistics off (statistics are off by default):

setVonageMetadata(null);
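The metadata object can be assembled by a small helper that keeps `sourceType` restricted to the two documented values. This is a sketch under assumptions: `MetadataSketch` mirrors only the three fields shown above and is not the library's `VonageMetadata` type, and the empty-`appId` and URL checks are illustrative validation rather than documented requirements.

```typescript
// The two source types named in the sample above, expressed as a type union.
type SourceType = "video" | "voice";

// Hypothetical local shape with the three fields used in this section.
interface MetadataSketch {
  appId: string;
  sourceType: SourceType;
  proxyUrl?: string;
}

// Build a metadata object, leaving `proxyUrl` out entirely when it is not given.
function buildMetadata(
  appId: string,
  sourceType: SourceType,
  proxyUrl?: string
): MetadataSketch {
  if (appId.trim() === "") {
    throw new Error("appId must not be empty");
  }
  const metadata: MetadataSketch = { appId, sourceType };
  if (proxyUrl !== undefined) {
    new URL(proxyUrl); // throws a TypeError when the URL is malformed
    metadata.proxyUrl = proxyUrl;
  }
  return metadata;
}
```

Assuming the shapes match, the result of `buildMetadata("vonage app id", "video")` could be passed to `setVonageMetadata` to turn statistics on. Note that `"video" | "voice"` is valid only as a type, so the object itself must carry one of the two strings.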